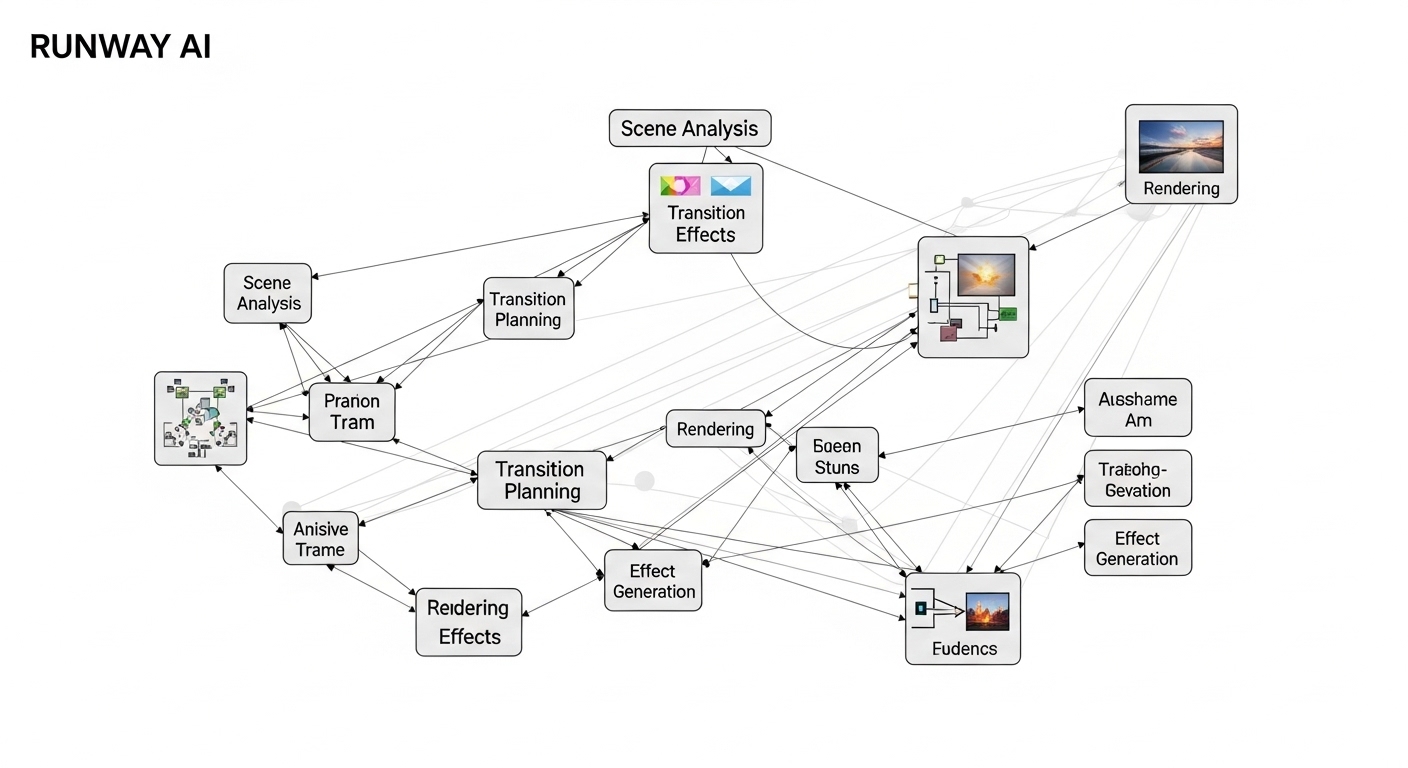

Artificial intelligence is reshaping digital content creation in dramatic ways. Among the most exciting developments is the rise of AI video generation and editing tools — systems that can automatically interpret creative direction and produce visuals with fluid movement, compelling transitions, and sophisticated visual effects. One of the standout platforms in this space is Runway AI, a generative AI creative suite that blends text interpretation, visual synthesis, and editing into a unified workflow. Understanding how Runway AI handles scene transitions and visual effects offers a glimpse into the future of media production — where creators can focus more on storytelling and less on technical complexity.

Before we dive in, it’s also worth noting that invideo has now integrated Runway’s AI capabilities into its editing ecosystem, allowing users to access powerful Runway generation tools directly inside the platform. This makes it easier for marketers, social media creators, and storytellers to create rich visuals without toggling between tools. The Runway AI video integration simplifies adding cinematic transitions and effects to video projects directly within invideo’s editor.

What Makes Runway AI Different

Runway AI is designed as a creative hub that offers more than basic editing: it provides generative tools that produce video, images, and effects using machine learning models. At its core, Runway relies on models such as Gen-4 and Gen-4.5, which have been trained on vast datasets to understand not just what objects should appear, but how they should move, interact, and transition from one scene to another.

This means creators can write text descriptions or upload reference materials, and Runway will interpret and translate these inputs into visual sequences with meaningful motion and structure.

Scene Transitions: Beyond Simple Cut Edits

One of the most important aspects of professional video production is scene transitions. Traditionally, editors manually choose cut points and apply transitions — like fades, wipes, or dissolves — to achieve narrative smoothness. Runway AI’s approach to transitions goes deeper than simple effects: it interprets scene structure and temporal relationships.
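For contrast, a traditional dissolve is a fixed per-pixel blend between two frames that knows nothing about scene content. The following is a minimal sketch of that classic technique, not of anything Runway does internally. The function names are hypothetical, and frames are simplified to flat lists of grayscale values between 0 and 1.

```python
def cross_dissolve(frame_a, frame_b, t):
    """Blend two same-sized frames; t=0 gives frame_a, t=1 gives frame_b."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    # Each output pixel is a weighted average of the two input pixels.
    return [(1.0 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def dissolve_sequence(frame_a, frame_b, steps):
    """Return `steps` blended frames running from frame_a to frame_b."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [
        cross_dissolve(frame_a, frame_b, i / (steps - 1))
        for i in range(steps)
    ]


if __name__ == "__main__":
    dark = [0.0, 0.0, 0.0]
    bright = [1.0, 1.0, 1.0]
    for frame in dissolve_sequence(dark, bright, 5):
        print(frame)
```

The blend weight depends only on time, which is why a manual dissolve cannot follow a character or adapt lighting to the scene on its own.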

When generating or editing a video, Runway considers:

- Semantic continuity (whether characters, objects, or settings remain coherent across shots)

- Motion direction (maintaining the sense of movement over time)

- Contextual timing (ensuring transitions reflect story pacing)

- Spatial consistency (keeping objects and environment proportions consistent)

For example, if a prompt describes a character walking from a forest into an urban street, Runway doesn’t just place two unrelated scenes side by side. It analyzes the motion, context, and pacing implied in the text to generate a smoother visual bridge. This may include:

- Gradual lighting changes that reflect the shift from woods to the city

- Camera motion that follows the character’s movement

- Background changes that maintain a coherent spatial flow

These kinds of transitions help the viewer remain immersed in the story, and they are often far more intuitive than standard manual edits.

Integration With Invideo: A Combined Workflow

As noted earlier, invideo has integrated Runway AI generation into its editor, allowing users to access powerful AI features — like scene transitions and visual effects — directly within their existing workflow. This combined approach means content creators don’t need to export clips across platforms or deal with separate tools; everything is done within one interface.

Such integration brings major practical benefits:

- Seamless generation and editing: Create AI-produced scenes, then refine them in invideo

- Template support: Use Runway’s output with customizable invideo templates

- Enhanced effects: Layer AI-generated effects with traditional editing elements

- Time savings: Reduce turnaround from idea to publish-ready content

By uniting generative AI with a flexible editor, creators gain both speed and control — a balance often missing in older tools.

Visual Effects With Generative Intelligence

Visual effects (VFX) traditionally require deep technical expertise. Artists spend hours crafting particle effects, lighting adjustments, background replacements, and compositing. Runway AI is changing this by using generative models that interpret prompts and apply visual effects with minimal manual interaction.

Here’s how it approaches visual effects:

1. AI-Driven Predictive Effects

Runway uses learned patterns from high-quality footage to apply realistic effects automatically. These can include:

- Smoke, fire, or atmospheric effects

- Lighting enhancements and shading adjustments

- Background rendering with consistent textures

Unlike preset templates, these effects are generated with context — meaning they align with the scene’s narrative and visual style.

2. Text-Prompted Effects

Creators can describe the effect they want, and Runway generates it accordingly. For example, a prompt like “Add slow-motion water droplets with soft light refraction” results in an effect tailored to that description instead of a generic preset.

3. Integrated Editing Tools

Runway doesn’t force creators to leave for external applications. Its editing suite includes tools for repositioning effects, refining motion tracking, and adjusting timing — all powered by AI that anticipates creative intent.

The Role of AI Models Under the Hood

Runway’s ability to handle scene transitions and visual effects is powered by deep learning models such as Gen-4 and Gen-4.5. These models were designed for flexible creative input and high visual fidelity, allowing them to generate and edit video content that can sit alongside live-action or animated footage.

What makes these models powerful is not just motion generation, but their ability to adhere to contextual prompts — meaning they can interpret a variety of descriptive cues such as:

- Environment descriptors (e.g., “sunset beach with crashing waves”)

- Action cues (e.g., “camera slowly zooms in on subject”)

- Artistic direction (e.g., “cinematic, warm tones with shallow depth of field”)

The AI treats transitions and effects as part of the creative narrative, not just as added overlays. This allows for results that feel more intentional and integrated.
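One way to keep the three kinds of cue listed above organized is to store them separately and join them into a single prompt string. The sketch below is plain string assembly only: `ShotPrompt` and its field names are hypothetical, it does not call any Runway or invideo API, and the resulting text would simply be pasted into whichever prompt field the tool provides.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical container for the three cue types described above."""
    environment: str  # e.g. "sunset beach with crashing waves"
    action: str       # e.g. "camera slowly zooms in on subject"
    style: str        # e.g. "cinematic, warm tones with shallow depth of field"

    def to_text(self) -> str:
        """Join the non-empty cues into one period-separated prompt."""
        parts = [self.environment, self.action, self.style]
        cleaned = [p.strip().rstrip(".") for p in parts if p.strip()]
        return ". ".join(cleaned) + "." if cleaned else ""


if __name__ == "__main__":
    shot = ShotPrompt(
        environment="sunset beach with crashing waves",
        action="camera slowly zooms in on subject",
        style="cinematic, warm tones with shallow depth of field",
    )
    print(shot.to_text())
```

Keeping the cues in separate fields makes it easy to vary one of them, such as the artistic direction, while holding the environment and action constant across several versions of a shot.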

Real-World Use Cases

The way Runway AI handles transitions and effects has practical applications across multiple domains:

Marketing and Ads

Brands can automatically generate cinematic transitions that match campaign tones. Instead of hiring an editor for every variation, teams can quickly produce several ad versions with different visual styles.

Storytelling and Narrative Videos

Content creators can focus on narrative direction rather than editing logistics. By describing how scenes should flow, they get sequences that feel more cohesive.

Social Media Content Production

For short-form platforms like Instagram, TikTok, and YouTube Shorts, smooth transitions and quick visual effects can drive higher engagement. AI can also help produce multiple versions of a video, each optimized for a different platform.

Educational and Instructional Content

Visual effects like animated overlays or dynamic transitions help make complex information more digestible, improving viewer retention and comprehension.

Challenges and Future Outlook

While Runway AI is capable, it’s not perfect. Some effects may still require manual fine-tuning, especially in scenes needing very specific artistic nuances. Additionally, AI models can occasionally produce outputs that don’t perfectly match highly detailed or ambiguous prompts.

However, the future looks promising. Continuous advancements, such as more sophisticated motion modeling, deeper text understanding, and tighter creative controls, are making AI a more capable partner in video production. Future models are likely to further narrow the gap between human-directed cinematography and AI-assisted generation.

Conclusion

AI tools like Runway are transforming how creators handle complex tasks like scene transitions and visual effects. By interpreting text, synthesizing motion, and generating effects that align with narrative context, Runway bridges the gap between conceptual storytelling and polished visual output.

When combined with platforms like invideo through the integrated Runway AI video workflow, creators get a unified environment where ideas flow from prompt to finished video. This integration shows that AI is not merely automating tasks but expanding creative possibilities, helping storytellers, marketers, and visionaries focus on what matters most: impactful narratives.